Use Cases

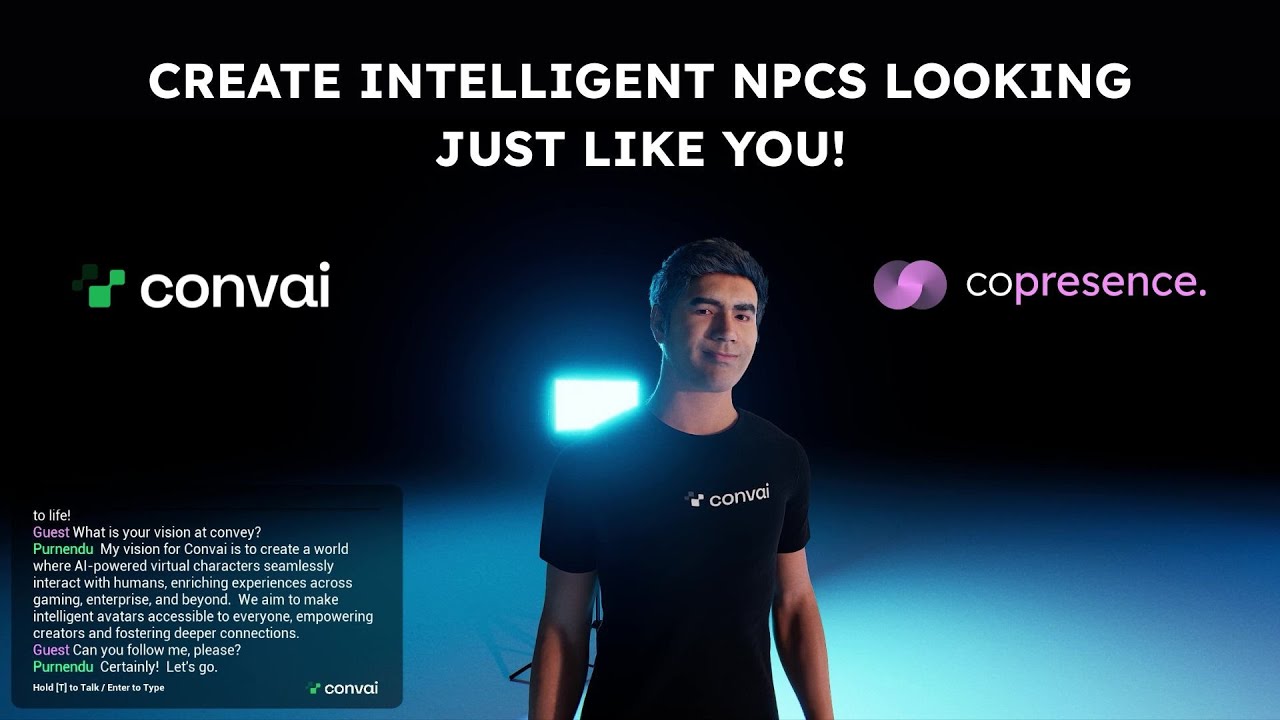

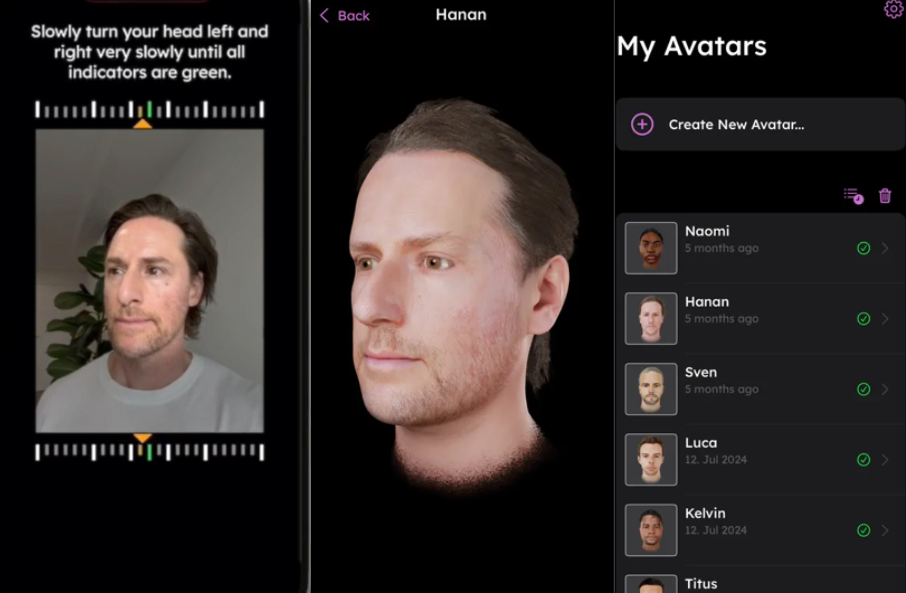

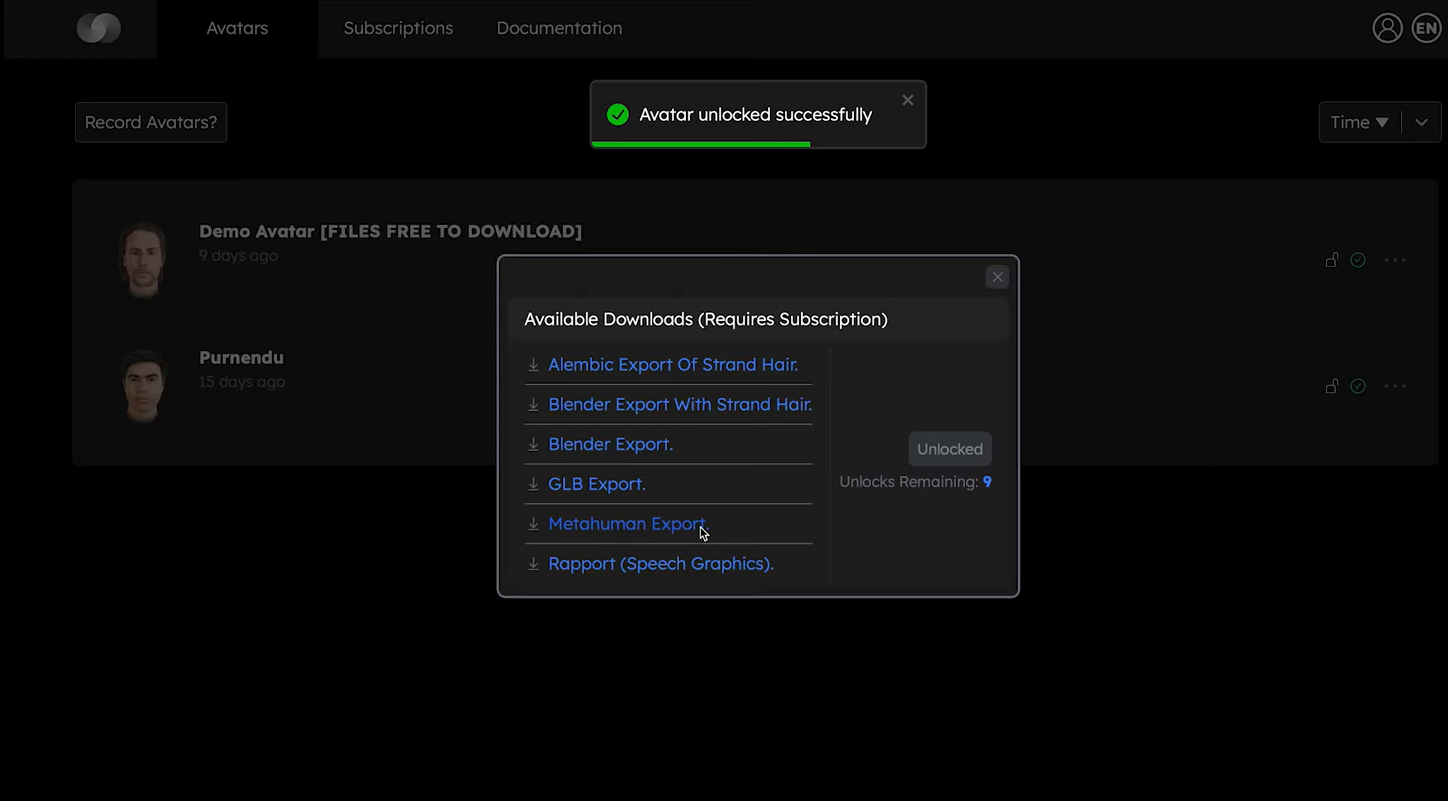

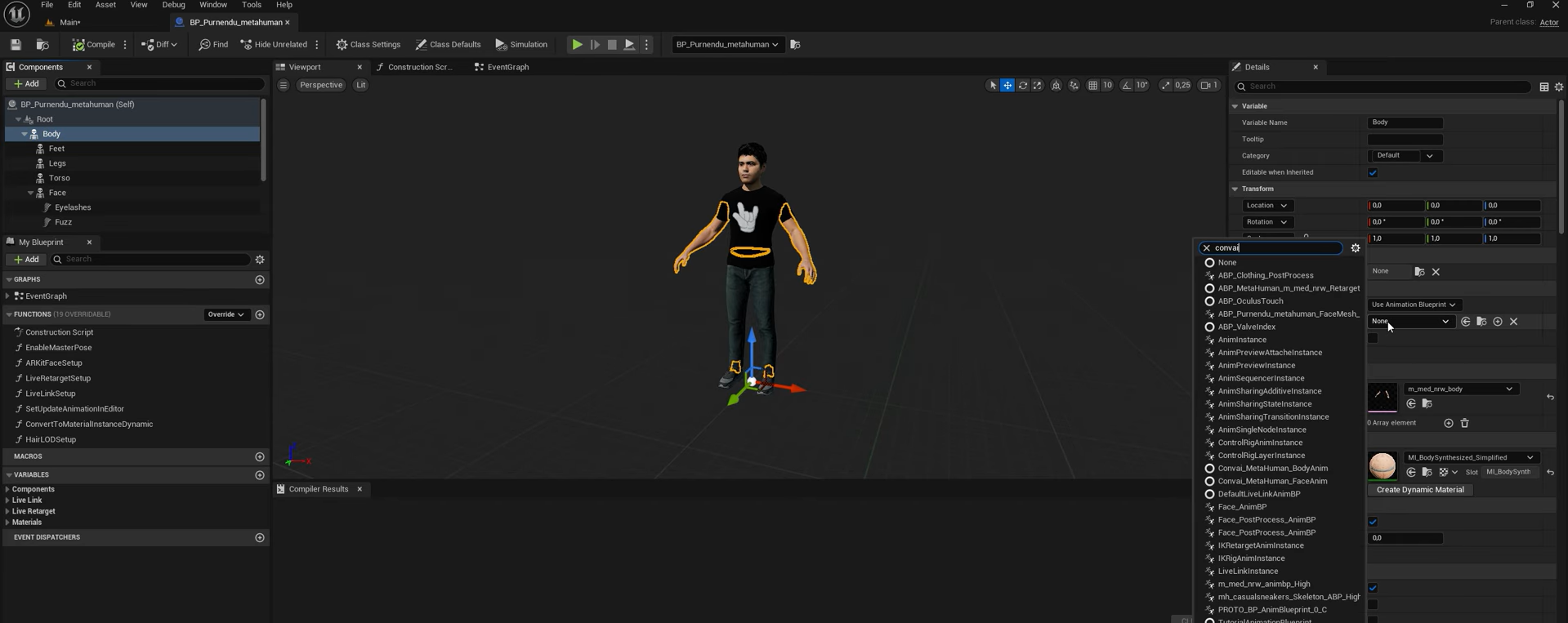

Convai and Copresence have partnered to deliver an end‑to‑end pipeline for intelligent, photoreal 3D avatars in Unreal Engine. Creators scan a face with the Copresence app, convert it to a MetaHuman, and connect Convai for real‑time conversation, lip‑sync, and scene actions. The result: lifelike avatars that look like you and can talk, listen, and move on command—ready for games, virtual production, training, and brand experiences.

Copresence highlighted the workflow in a community tutorial by Anderson Rohr (Third Move Studios), demonstrating the full path from scan to MetaHuman to Convai‑powered intelligence.


Together, these steps produce avatars that not only appear human but also behave intelligently in live scenes.

The partnership unlocks massive potential for Learning and Development. Imagine an on-brand, photorealistic AI instructor who demonstrates safety procedures, explains assembly steps, or evaluates trainees in real time, all while speaking and gesturing naturally.

Organizations can use these avatars to deliver immersive soft-skills training, sales simulations, and medical role-plays—bridging the gap between digital learning and real-world behavior.

Convai handles real-time dialogue and reactions, while Copresence ensures the instructor or trainee avatar looks lifelike, creating stronger emotional connection and engagement.

In physical and virtual events alike, brands can now deploy AI greeter avatars that are both hyper-realistic and intelligent. Picture a photoreal brand ambassador that recognizes visitors, answers questions, and provides product demos—all powered by Convai’s conversational AI.

With Copresence scans, these digital hosts can even mirror real brand representatives, creating a powerful sense of familiarity and continuity between the physical and digital experience.

Film and virtual production teams can leverage this workflow to create on-brand digital hosts or co-anchors who can interact with talent, respond in real time, and improvise on set.

With photoreal Copresence scans and Convai’s real-time language understanding, creators can script interactive dialogue segments, live interviews, or AI-driven co-hosts for immersive broadcast experiences—all inside Unreal Engine.

1. What does the Convai × Copresence integration enable?

It connects Copresence’s photoreal scanning pipeline with Convai’s conversational AI system, allowing creators to bring lifelike avatars into Unreal Engine that can speak, emote, and act naturally.

2. Which Unreal Engine versions are supported?

The Copresence MetaHuman Import Wizard supports UE 5.5 and has a manual setup path for UE 5.6. Convai’s plugin offers support for multiple Unreal versions and continues to receive regular updates.

3. Can I use the workflow beyond MetaHumans?

Yes. Copresence exports also support glTF/GLB and Blender formats, while Convai’s system works with a range of custom avatar rigs inside Unreal and other engines.

4. Is this suitable for real-time or live experiences?

Absolutely. Both Copresence and Convai are optimized for real-time rendering and interaction. This makes them ideal for events, simulations, and virtual productions that require immediate responsiveness.

5. Do I need specialized hardware or mocap suits?

No—just a smartphone for scanning and a PC running Unreal Engine. Copresence’s app handles photoreal capture, while Convai provides the AI and animation pipeline.

6. Can avatars perform complex tasks like following or pointing?

Yes. Convai’s Action System allows avatars to navigate scenes, follow players, perform gestures, and even interact with in-scene objects through Blueprint integration.

The Convai × Copresence partnership marks a major step toward true digital presence. By merging photoreal scanning with agentic conversational AI, creators can now generate avatars that look, sound, and think like real people—all within Unreal Engine.

Whether you’re training the workforce of tomorrow, crafting immersive game worlds, or enhancing live events with digital hosts, this workflow offers a scalable, production-ready path to intelligent digital humans.

What was once a multi-week pipeline of scanning, rigging, and scripting is now achievable in a single afternoon—with Copresence capturing your likeness and Convai bringing it to life.